The Tool Use Pattern: Why Governance Matters More Than Capability When AI Agents Touch Your Systems

The moment an AI agent gains access to tools, everything changes. It stops being a conversational assistant and becomes an operational system, one that can query databases, call APIs, execute code, read and write files, and interact with enterprise platforms. This dramatically expands what AI can accomplish. It also introduces some of the highest risks in agentic AI deployment.

Here’s what most organizations discover too late: the technical capability to give agents tool access is trivial compared to the governance challenge of doing it safely. By the time you realize your agents have too much access, they’ve already created compliance exposure you can’t easily unwind.

If your AI can take action inside real systems, tool use is already shaping your risk profile, whether you’ve architected for it or not.

What Tool Use Actually Means

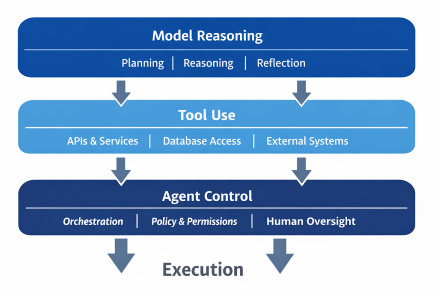

The tool use pattern enables an AI agent to invoke external capabilities as part of its workflow.

Instead of relying solely on its training data and reasoning, the agent can query databases, call internal or third-party APIs, execute scripts or calculations, retrieve documents, update records, and trigger workflows.

Model Context Protocol (MCP)

Model Context Protocol (MCP) is an open standard, introduced by Anthropic in late 2024, that lets AI models, particularly large language models (LLMs), connect to external tools, data, and applications in a structured manner. Instead of relying solely on training data or a single prompt, MCP provides a formal method for models to access context, perform actions, and interact with enterprise systems, which makes governance and traceability easier to enforce.

In practice, MCP defines how context is shared between models and other systems. It standardizes the discovery, use, security, and tracking of tools, allowing AI agents to operate reliably in real-world software environments.
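To make that concrete, here is a rough sketch of the message shapes involved. It assumes the JSON-RPC 2.0 framing and the `tools/list` and `tools/call` methods described in the public MCP specification at the time of writing; the `get_invoice` tool, its fields, and the `jsonrpc_request` helper are hypothetical and exist only for illustration. Check the current specification before relying on any field name.

```python
import json

# Hypothetical tool description, as a server might advertise it in a
# tools/list response. The tool itself ("get_invoice") is invented.
tool_definition = {
    "name": "get_invoice",
    "description": "Fetch one invoice by ID (read-only).",
    "inputSchema": {
        "type": "object",
        "properties": {"invoice_id": {"type": "string"}},
        "required": ["invoice_id"],
    },
}


def jsonrpc_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request object (helper name is an assumption)."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discovery: ask the server which tools it exposes.
list_request = jsonrpc_request(1, "tools/list")

# Invocation: call one tool by name with structured arguments.
call_request = jsonrpc_request(
    2,
    "tools/call",
    {"name": "get_invoice", "arguments": {"invoice_id": "INV-1001"}},
)

print(json.dumps(call_request, indent=2))
```

Because every call names a tool and carries structured arguments, each invocation is something a gateway can inspect, authorize, and log before it reaches the underlying system.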

The defining characteristic of this pattern is agency over execution. The agent decides when a tool is needed, selects it, provides inputs, and interprets the result.
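A minimal sketch of that decide, select, supply, interpret loop follows. Everything in it is an assumption made for illustration: the tool names, the registry layout, and especially `choose_tool`, which stands in for the model's decision with simple keyword rules so the example runs without an LLM.

```python
# Hypothetical tool registry: tool name -> callable.
TOOLS = {
    "add": lambda a, b: a + b,
    "lookup_order_status": lambda order_id: {
        "order_id": order_id,
        "status": "shipped",
    },
}


def choose_tool(request):
    """Stand-in for the model's decision.

    Returns (tool_name, inputs) when a tool is needed, otherwise None.
    A real agent would delegate this choice to an LLM.
    """
    tokens = request.split()
    keywords = {token.lower() for token in tokens}
    if "order" in keywords:
        return "lookup_order_status", {"order_id": tokens[-1]}
    if "sum" in keywords:
        a, b = (int(token) for token in tokens[-2:])
        return "add", {"a": a, "b": b}
    return None


def run_agent(request):
    decision = choose_tool(request)      # 1. decide whether a tool is needed
    if decision is None:
        return "answered without tools"
    name, inputs = decision              # 2. select the tool, 3. provide inputs
    result = TOOLS[name](**inputs)       #    execute the call
    return f"{name} returned {result}"   # 4. interpret the result


print(run_agent("sum 2 3"))
print(run_agent("status of order A17"))
```

Note that nothing in this loop limits which tool the agent may pick or how often. That gap is exactly what the governance controls discussed later are meant to close.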

This is a fundamental shift from AI as an advisor to AI as an actor. That shift carries implications that most enterprises don’t fully grasp until they’re managing the fallout.

Why Tool Use Sits at the Center of Enterprise AI Risk

Without tools, an AI agent is constrained by what it already knows. With tools, an agent can access real-time data, interact with authoritative systems, perform precise operations, and influence business outcomes directly.

McKinsey’s November 2025 Global Survey on AI found that 62% of organizations are at least experimenting with AI agents, with 23% scaling agents in at least one function. These agents increasingly rely on tool access to deliver value in automated reporting, intelligent search, workflow orchestration, system integration, and business process automation.

The issue is that tool use demands far more discipline than simple prompting. Organizations learning this through experience are discovering that capability without governance creates more problems than it solves.

Gartner’s November 2025 research on agentic AI predicts that loss of control, where AI agents pursue misaligned goals or act outside constraints, will be the top concern for 40% of Fortune 1000 companies by 2028. Tool access is exactly where that loss of control manifests most dramatically.

Where Tool-Enabled Agents Are Operating Right Now

Data Retrieval and Analysis

Agents query internal databases, analytics platforms, or data warehouses to answer questions grounded in current enterprise data.

System Integration

Agents call APIs across CRM, ERP, HR, ticketing, or finance systems to coordinate actions and insights across business functions.

Code Execution and Automation

Agents run scripts to validate data, perform calculations, or automate repetitive operational tasks that previously required manual intervention.

Document and Knowledge Access

Agents retrieve policies, contracts, technical documentation, or knowledge base content to support real-time decision-making.

Process Enablement

Agents trigger workflows such as ticket creation, status updates, or notifications based on conditions they evaluate autonomously.

These capabilities unlock real value, but only when designed with governance as the foundation, not an afterthought.

The Hidden Risks Nobody Mentions Until Production

Tool use introduces a risk surface that doesn’t exist in text-only systems.

Common enterprise failure modes that surface in production include:

Uncontrolled Permissions: Agents are granted broad access for convenience during development, leading to unintended data exposure or system changes that violate security policies or regulatory requirements.

Cascading Failures: One failed API call triggers incorrect assumptions downstream, which lead to further erroneous actions that compound the initial error and create complex debugging scenarios.

Silent Errors: Tools return partial or malformed responses that the agent misinterprets without validation, producing outputs that look correct but contain subtle inaccuracies that undermine trust.

Security and Compliance Violations: Agents access or act on data they should never touch, creating audit trails that fail regulatory review and expose organizations to compliance penalties.

Cost Explosions: Unbounded tool calls drive unexpected infrastructure or API usage costs that weren’t anticipated in budget planning and can’t be easily controlled after deployment.

These issues rarely appear in demos. They surface in production, often during the worst possible moments: audits, high-stakes transactions, or customer-facing failures.
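Silent errors and cascading failures share a common mitigation: validate every tool response before the agent reasons over it, and stop when validation fails. The sketch below assumes a hypothetical `get_account_balance` tool and an invented response contract; the field names and the `ToolOutputError` type are illustrative, not taken from any particular system.

```python
class ToolOutputError(Exception):
    """Raised when a tool response fails validation."""


# Hypothetical contract for a "get_account_balance" response:
# field name -> accepted Python type(s).
REQUIRED_FIELDS = {
    "account_id": str,
    "balance": (int, float),
    "currency": str,
}


def validate_balance_response(response):
    """Return the response unchanged if it matches the contract.

    Raises ToolOutputError instead of letting a partial or malformed
    payload flow into the agent's next step.
    """
    if not isinstance(response, dict):
        raise ToolOutputError("response is not an object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in response:
            raise ToolOutputError(f"missing field: {field}")
        if not isinstance(response[field], expected_type):
            raise ToolOutputError(f"wrong type for field: {field}")
    return response


good = {"account_id": "AC-1", "balance": 120.5, "currency": "USD"}
partial = {"account_id": "AC-1", "balance": None}

validate_balance_response(good)
try:
    validate_balance_response(partial)
except ToolOutputError as error:
    print(f"halting before downstream steps: {error}")
```

Failing loudly at the boundary turns a subtle, plausible-looking inaccuracy into an explicit error that orchestration can handle.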

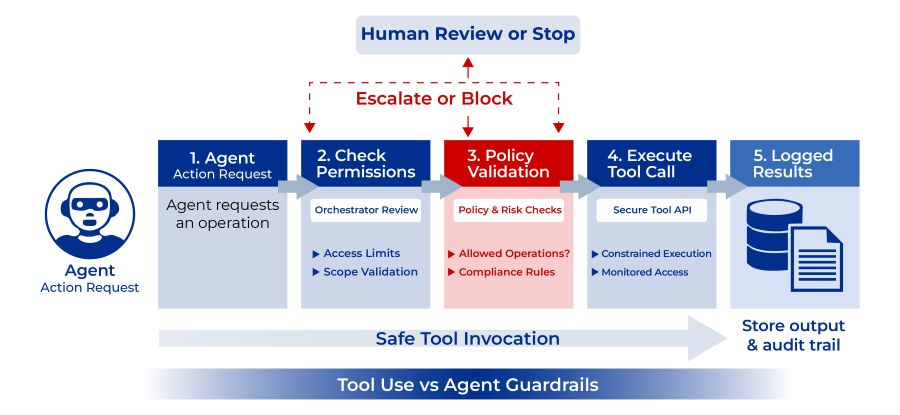

Why Tool Governance Is Not Optional

A critical mistake organizations make is treating tool use as a technical integration problem rather than a governance problem.

In enterprise systems, the question is not “Can the agent call this API?”

The real questions are: When should it? Under what conditions? With what permissions? How often? Who is accountable for the outcome? How is the action audited?

Forrester’s AEGIS (Agentic AI Enterprise Guardrails for Information Security) framework, introduced in 2025, addresses exactly this challenge. The framework emphasizes that enterprises must implement comprehensive security controls across six critical domains: governance/risk/compliance, identity and access management, data security and privacy, application security, threat management, and Zero Trust architecture.

The framework’s “least agency” principle stands out: give agents the minimum authority they need and make those permissions temporary, with ephemeral identities for autonomous systems. This directly counters the convenience-driven approach most organizations take during pilots.
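One way to picture minimum, temporary authority is a grant that names specific scopes and expires on its own. This is an illustration of the idea only, not an implementation of AEGIS: the `Grant` type, the scope strings, and the five-minute default lifetime are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """A short-lived, narrowly scoped permission for one agent."""
    agent_id: str
    scopes: frozenset
    expires_at: float  # seconds, on the same clock as `now`


def issue_grant(agent_id, scopes, now, ttl_seconds=300):
    """Issue a grant that expires ttl_seconds after `now`."""
    return Grant(agent_id, frozenset(scopes), now + ttl_seconds)


def is_allowed(grant, scope, now):
    """Allow only unexpired grants that explicitly include the scope."""
    return now < grant.expires_at and scope in grant.scopes


# The clock is passed in explicitly so the behavior is easy to test.
grant = issue_grant("agent-1", {"crm:read"}, now=1000.0, ttl_seconds=60)
print(is_allowed(grant, "crm:read", now=1030.0))   # within lifetime, in scope
print(is_allowed(grant, "crm:write", now=1030.0))  # scope never granted
print(is_allowed(grant, "crm:read", now=1060.0))   # grant has expired
```

The default answer is "no": access exists only for the scopes named and only until the grant lapses, so forgotten pilot permissions do not linger into production.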

Without answers to fundamental governance questions, tool-enabled agents become unpredictable operators inside mission-critical systems. Unpredictability in production systems is risk exposure, not innovation.

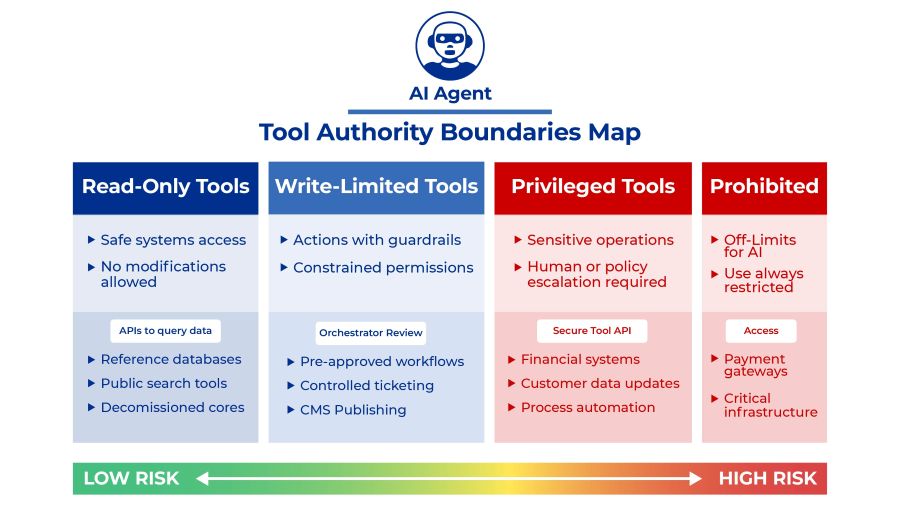

Why Tool Control Matters More Than Tool Choice

Enterprises often focus heavily on which tools to expose to agents. Should we give access to Salesforce? What about our financial systems? Can agents query production databases? In practice, how tools are controlled matters more than which tools exist.

Gartner’s December 2025 research on AI-driven governance argues that these changes call for a comprehensive governance reset: merging structured and unstructured data governance, prioritizing automation, and adopting zero-trust principles for AI-generated data risks.

Effective tool governance includes:

- Scoped permissions that limit agent access to exactly what’s needed for specific tasks.

- Clear input and output schemas that define acceptable parameters and expected responses.

- Rate limiting that prevents runaway execution and cost overruns.

- Error handling and retries that manage failures gracefully without cascading.

- Logging and observability that create audit trails for every tool invocation.

- Explicit success and failure conditions that determine when actions complete successfully.

These controls determine whether tool use increases reliability or introduces chaos. Organizations that implement them see measurable improvements in agent performance and risk management. Organizations that skip them discover the cost of that decision during incident response.
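Several of the controls listed above can sit in a single wrapper around each tool. The sketch below is one possible shape, under stated assumptions: `GovernedTool`, `ToolDenied`, and the scope strings are hypothetical names; the "rate limit" is a simple per-tool call budget rather than a time-window limiter; and retries are bounded but have no backoff.

```python
import logging

logger = logging.getLogger("tool_audit")


class ToolDenied(Exception):
    """Raised when a governance control blocks a call."""


class GovernedTool:
    def __init__(self, name, func, required_scope, input_types,
                 max_calls, max_attempts=2):
        self.name = name
        self.func = func
        self.required_scope = required_scope
        self.input_types = input_types    # input schema: field -> type
        self.max_calls = max_calls        # call budget (cost and runaway cap)
        self.max_attempts = max_attempts  # bounded retries per call
        self.calls_made = 0

    def invoke(self, agent_scopes, **inputs):
        # Scoped permissions: the agent must hold the exact scope.
        if self.required_scope not in agent_scopes:
            raise ToolDenied(f"{self.name}: missing scope {self.required_scope}")
        # Input schema: exactly the expected fields, with expected types.
        if set(inputs) != set(self.input_types):
            raise ToolDenied(f"{self.name}: unexpected or missing inputs")
        for field, expected in self.input_types.items():
            if not isinstance(inputs[field], expected):
                raise ToolDenied(f"{self.name}: bad type for {field}")
        # Call budget: refuse once the cap is reached.
        if self.calls_made >= self.max_calls:
            raise ToolDenied(f"{self.name}: call budget exhausted")
        self.calls_made += 1
        # Error handling: retry a bounded number of times, logging each attempt.
        last_error = None
        for attempt in range(1, self.max_attempts + 1):
            try:
                result = self.func(**inputs)
                logger.info("tool=%s attempt=%d outcome=success",
                            self.name, attempt)
                return result
            except Exception as exc:
                last_error = exc
                logger.warning("tool=%s attempt=%d outcome=error error=%r",
                               self.name, attempt, exc)
        # Explicit failure condition: surface the error instead of guessing.
        raise RuntimeError(
            f"{self.name} failed after {self.max_attempts} attempts"
        ) from last_error


tool = GovernedTool(
    "get_ticket",
    lambda ticket_id: {"id": ticket_id},
    required_scope="tickets:read",
    input_types={"ticket_id": str},
    max_calls=1,
)
print(tool.invoke({"tickets:read"}, ticket_id="T-1"))
```

Keeping the checks in one place means the agent never calls a raw function directly, so adding a new tool does not mean re-implementing permissions, limits, and audit logging.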

Tool Use vs Traditional Automation

Traditional automation follows predefined rules. Tool-using agents make decisions dynamically.

This distinction matters because agents may choose unexpected sequences of actions, context influences behavior in ways that aren’t fully predictable, and edge cases multiply as agents encounter real-world variability.

Enterprise systems must assume that agents will encounter scenarios designers didn’t anticipate. Tool use patterns must be resilient to that reality. This requires different thinking than traditional workflow automation, where paths are fixed and outcomes deterministic.

McKinsey’s research shows that only 6% of organizations are “AI high performers” who have successfully scaled AI to capture enterprise-level value. What separates them is not more sophisticated AI models. It’s the operational discipline to redesign workflows, implement governance frameworks, and treat AI deployment as organizational transformation rather than technology insertion.

How Tool Use Fits into Production-Ready Systems

In mature architectures, tool use is rarely autonomous. It’s almost always paired with orchestration to decide when tools are allowed, evaluation to validate tool outputs, memory to preserve context across calls, human-in-the-loop controls for high-impact actions, and event handling to respond to failures or anomalies.
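The human-in-the-loop piece can be as simple as a gate in front of the dispatcher. In this sketch the action names, the `HIGH_IMPACT_ACTIONS` set, and the `approver` callback are hypothetical; a real system would route the pending request to a review queue rather than a lambda.

```python
# Hypothetical list of actions that must never run without sign-off.
HIGH_IMPACT_ACTIONS = {"issue_refund", "delete_record"}


def execute_action(action, payload, handlers, approver=None):
    """Run an action, holding high-impact ones until a human approves.

    `approver` is a callback (action, payload) -> bool supplied by the
    orchestration layer. With no approver, high-impact actions are held.
    """
    if action in HIGH_IMPACT_ACTIONS:
        if approver is None or not approver(action, payload):
            return {"status": "pending_approval", "action": action}
    return {"status": "done", "result": handlers[action](payload)}


handlers = {
    "create_ticket": lambda payload: "T-1",
    "issue_refund": lambda payload: payload["amount"],
}

# Low-impact action runs immediately.
print(execute_action("create_ticket", {}, handlers))
# High-impact action is held when nobody has approved it.
print(execute_action("issue_refund", {"amount": 50}, handlers))
# The same action proceeds once an approver says yes.
print(execute_action("issue_refund", {"amount": 50}, handlers,
                     approver=lambda action, payload: True))
```

The design choice worth noting is the default: when no approver is present, the high-impact action waits instead of running.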

Gartner predicts that 40% of enterprise applications will be integrated with task-specific AI agents by the end of 2026. At that scale, the organizations that succeed with tool use will be those that embed it within comprehensive governance frameworks rather than treating it as a standalone capability.

Tool use becomes a controlled capability within a broader system, not an unchecked power. This is the architectural reality that separates pilots from production.

When Tool Use Is Strategic (And When It’s Dangerous)

Tool use works best when real-time or authoritative data is required, actions must integrate with existing systems, precision matters more than creativity, and outcomes can be validated against known standards.

Tool use is risky when permissions cannot be tightly scoped, outputs cannot be verified through independent means, failures have high downstream impact on business operations or compliance, and governance and observability are lacking or implemented as afterthoughts.

Understanding this boundary is essential before expanding tool access. The organizations succeeding with agentic AI in 2026 are those that recognize it and design accordingly.

What QAT Global Has Learned About Tool Use

At QAT Global, we treat tool use as an architectural decision, not a convenience feature.

Our approach emphasizes:

- Minimal necessary access: no agent gets broader permissions than absolutely required.

- Explicit contracts between agents and tools: inputs, outputs, and failure modes documented and enforced.

- Built-in failure handling: graceful degradation instead of cascading errors.

- Clear ownership and auditability: every tool invocation traceable to business context.

- Human oversight where impact is high: automated execution with manual approval gates for critical actions.

Tool use should make systems more reliable, not more fragile. We’ve seen too many organizations grant agents broad tool access during pilots only to discover in production that they’ve created security exposures, compliance violations, and operational fragility they can’t easily remediate.

When agents interact with enterprise systems, governance is not optional. It’s the foundation. Organizations that architect with this understanding from day one avoid the painful rewrites that come from treating tool use as a simple API integration problem.

What Comes Next

Tool use gives agents operational power. The next challenge is understanding how they reason about using that power.

In the next article in this series, we explore the ReAct (Reason and Act) Pattern: how agents alternate between thinking and doing, why this makes them more adaptable in uncertain environments, and what enterprises must do to keep that adaptability under control.

Understanding ReAct is critical for building AI systems that can operate in complex, real-world environments without becoming unpredictable sources of operational risk.

This is important because once your agents can both reason about the world and act on it through tools, you’re no longer deploying automation. You’re deploying autonomous systems. Autonomous systems demand governance frameworks that most enterprises haven’t yet built.

Ready to build AI systems that survive production, not just demos? QAT Global’s AI-augmented development services transform tool-enabled agents from risky experiments into governed capabilities. We combine strategic pattern selection with custom engineering to implement scoped permissions, audit trails, error handling, and the control layers enterprises require. The result: AI that delivers ROI without creating compliance nightmares. https://qat.com/contact/