The Reflection Pattern

Here’s what nobody tells you about agentic AI: the moment your team discovers reflection is often the same moment they overestimate what it can do.

Reflection (the ability for an AI agent to review and revise its own output before delivering a final result) represents a breakthrough in quality improvement. It transforms AI from a one-shot generator into an iterative contributor that can catch its own mistakes, improve clarity, and deliver more polished results.

But in enterprise environments, reflection alone isn’t enough. Organizations learning this the hard way are discovering that what looks like a quality breakthrough at small scale can become a compliance liability at production scale.

If your AI outputs are getting better but still failing audits, or if your agents produce work that looks polished but breaks under scrutiny, reflection is likely part of your solution (and only part of it).

What the Reflection Pattern Actually Does

The reflection pattern enables an AI agent to evaluate its own output and revise it before presenting a final result. This is accomplished through a procedural and cognitive shift between generation and review, but not an epistemic separation. The agent remains constrained by the same training data, assumptions, and latent biases, meaning reflection cannot be treated as independent verification.

Instead of treating the first response as complete, the agent follows a simple cycle:

Generate an initial output internally → Critique that output against specific criteria → Revise based on the critique → Repeat if necessary

This mirrors how humans work. We rarely produce our best work in a single pass. We draft, review, and refine. Reflection brings that same iterative discipline into AI workflows.

It’s elegant, powerful, and deceptively simple, which is exactly why so many organizations deploy it without understanding its limitations.
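The cycle really is simple enough to sketch in a few lines. The Python below is a minimal illustration, not a reference implementation. The `reflect` function and the three callables passed into it are hypothetical names. In a real agent, each callable would typically be a prompt to the same model, which is why the critique step is not independent verification. The toy stand-ins use string rules so the example runs without an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ReflectionResult:
    output: str
    # One list of issues per critique pass; an empty list means the critic found nothing.
    critiques: list[list[str]] = field(default_factory=list)


def reflect(
    task: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], list[str]],
    revise: Callable[[str, str, list[str]], str],
    max_rounds: int = 3,
) -> ReflectionResult:
    """Generate a draft, then critique and revise it until the critic is satisfied.

    If the round budget runs out, the last revision is returned without a
    further critique, so callers cannot assume the output passed review.
    """
    draft = generate(task)
    history: list[list[str]] = []
    for _ in range(max_rounds):
        issues = critique(task, draft)
        history.append(issues)
        if not issues:
            break  # the agent's own critic found nothing left to fix
        draft = revise(task, draft, issues)
    return ReflectionResult(output=draft, critiques=history)


# Hypothetical stand-ins for model calls, so the sketch runs on its own.
def toy_generate(task: str) -> str:
    return "reflection improves drafts"


def toy_critique(task: str, draft: str) -> list[str]:
    issues = []
    if not draft[:1].isupper():
        issues.append("capitalize the first word")
    if not draft.endswith("."):
        issues.append("end with a period")
    return issues


def toy_revise(task: str, draft: str, issues: list[str]) -> str:
    if "capitalize the first word" in issues:
        draft = draft[:1].upper() + draft[1:]
    if "end with a period" in issues:
        draft += "."
    return draft


result = reflect("Summarize the reflection pattern", toy_generate, toy_critique, toy_revise)
print(result.output)          # Reflection improves drafts.
print(len(result.critiques))  # 2
```

The loop stops as soon as the agent's own critic is satisfied. Nothing in it checks the output against an external source or policy.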

Why Reflection Works So Well (When It Works)

Reflection works because it forces a procedural separation between generating an output and reviewing it.

When an agent generates content, it’s focused on producing something plausible and complete. When it reflects, it shifts into a different mode: one that looks for gaps in reasoning, inconsistencies, ambiguity, poor structure, tone mismatches, and missing requirements.

This change in perspective alone often surfaces issues that were invisible in the initial pass.

McKinsey’s 2025 State of AI report found that 64% of organizations say AI is enabling their innovation, with many reporting that iterative refinement patterns like reflection significantly improve output quality in software engineering and content generation workflows.

In practice, reflection reduces obvious errors, improves logical flow, produces clearer explanations, aligns tone with audience expectations, and increases perceived confidence in the output.

That’s why reflection is so widely adopted early in agentic systems. It delivers visible improvement with minimal additional infrastructure.

Where Enterprises Are Using Reflection Right Now

According to McKinsey’s November 2025 Global Survey on AI, 62% of organizations are at least experimenting with AI agents, with reflection patterns showing up across enterprise workflows where quality matters more than speed.

Content and Documentation

Agents draft reports, policies, or knowledge base articles, then review them for clarity, completeness, and tone before delivery.

Software Development

Reflection is used to review generated code for bugs, check adherence to coding standards, identify security concerns, and improve readability and maintainability.

Analysis and Research

Agents critique their own analyses by checking assumptions, validating logic, and identifying weak conclusions before presenting findings.

Customer Communication

Draft responses are reviewed to ensure they are accurate, empathetic, and aligned with brand voice before reaching customers.

In all of these cases, reflection improves results without requiring human intervention at every step. That efficiency gain makes it attractive. That same efficiency is also where the risk lives.

The Critical Limitation Nobody Mentions

Despite its strengths, reflection has an important limitation that becomes obvious only at scale. The agent is still judging its own work.

This means it may confidently reinforce incorrect assumptions. It cannot verify facts without external grounding and cannot enforce organizational policy unless explicitly instructed (and even then, only within the boundaries of its training). In addition, it may optimize for style over correctness.

Reflection improves quality, but it does not guarantee truth, compliance, or alignment with business rules.

In enterprise systems, that distinction isn’t academic; it’s operational. When organizations discover this limitation in production rather than in pilots, the cost is significant.

Why Reflection Fails at Enterprise Scale

Organizations often overestimate what reflection can do because early results are so promising.

At small scale, reflection feels like a breakthrough. Outputs improve noticeably, and teams gain confidence. At larger scale, cracks appear.

Common failure modes include:

- Confident but incorrect answers that pass internal review but fail external verification.

- Subtle policy violations that reflection doesn’t catch because the agent doesn’t truly understand the regulatory context.

- Inconsistent interpretations of standards across different business units or use cases.

- Outputs that look polished but fail audits because reflection optimized for appearance rather than correctness.

- Difficulty explaining why a decision was made, because reflection doesn’t create an audit trail that satisfies compliance requirements.

The root issue is that reflection is subjective unless paired with objective controls.

An agent cannot reliably enforce rules it doesn’t truly understand or cannot verify against authoritative sources. In regulated industries such as financial services, healthcare, and government, subjective quality improvement isn’t enough.

Reflection vs Evaluation: The Distinction That Matters

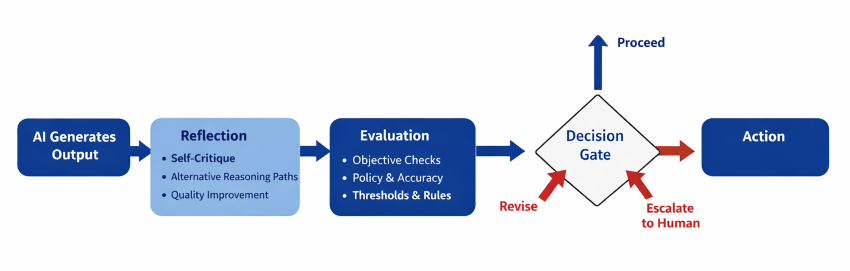

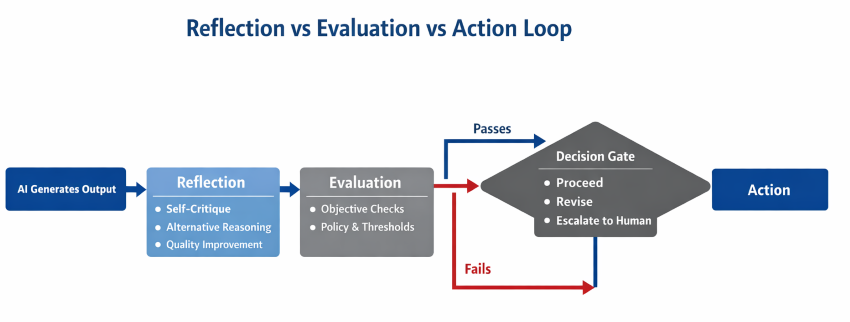

In production systems, reflection must be paired with evaluation. They serve different purposes.

Reflection asks: “How could this be better?”

Evaluation asks: “Does this meet defined requirements?”

Evaluation introduces external criteria, measurable standards, pass or fail thresholds, and business-aligned metrics that reflection alone cannot provide.

Without evaluation, reflection tends to optimize for appearance rather than correctness. This is where many enterprise AI initiatives stall: not because the AI isn’t improving its outputs, but because it’s improving them against the wrong standards.
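The sketch below shows the difference in practice. It assumes evaluation is implemented as deterministic checks defined outside the agent. The `Check` type, the rule set, and the disclosure sentence are hypothetical, loosely modeled on a financial-services output, and are not a real policy. Production evaluators often add retrieval-backed fact checks or a separate grading model. The point is that each check returns pass or fail against a stated requirement, regardless of how polished the text reads.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Check:
    name: str
    passes: Callable[[str], bool]  # rule owned by the business, not by the agent


def evaluate(output: str, checks: list[Check]) -> dict[str, bool]:
    """Run every check and record a pass/fail result per requirement."""
    return {check.name: check.passes(output) for check in checks}


def meets_requirements(results: dict[str, bool], required_pass_rate: float = 1.0) -> bool:
    """Apply a pass/fail threshold; the default 1.0 treats every check as mandatory."""
    if not results:
        raise ValueError("no checks were run")
    return sum(results.values()) / len(results) >= required_pass_rate


# Hypothetical rule set: the disclosure text and limits are illustrative only.
REQUIRED_DISCLOSURE = "Past performance does not guarantee future results."
checks = [
    Check("has_disclosure", lambda text: REQUIRED_DISCLOSURE in text),
    Check("under_400_chars", lambda text: len(text) <= 400),
    Check("no_guarantee_language", lambda text: "guaranteed return" not in text.lower()),
]

# A fluent, confident draft that a self-critique step might happily approve.
draft = "Our fund delivered a guaranteed return of 8% last year."
results = evaluate(draft, checks)
failed = [name for name, ok in results.items() if not ok]
print(failed)                       # ['has_disclosure', 'no_guarantee_language']
print(meets_requirements(results))  # False
```

A reflection loop could make this draft read better on every pass and still fail two of the three requirements. Only the external checks reveal that.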

Forrester’s December 2025 research on agent control planes emphasizes that enterprises need out-of-band oversight that can enforce policy and maintain trust regardless of how or where agents are built and executed. That kind of governance layer sits outside the agent, which is exactly what reflection, by design, cannot provide.

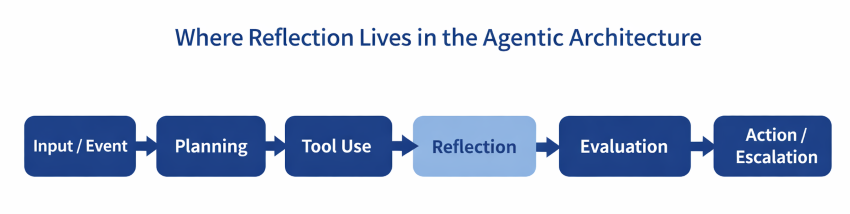

How Reflection Fits into Production-Ready Agentic Systems

In mature agentic architectures, reflection is rarely used alone.

It’s typically combined with tool use to verify facts or retrieve authoritative data, human-in-the-loop controls for high-risk outputs, evaluation patterns to enforce standards, and orchestration to decide when reflection is sufficient and when escalation is required.

Forrester’s research on adaptive process orchestration highlights that the orchestration plane enables teams to model processes, define routing and decision logic, and ensure that tasks, data, and actions move through a process in a controlled, observable sequence.

Reflection becomes one step in a governed workflow, not the final authority. Used this way, it delivers consistent value without creating false confidence.
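An orchestration layer's routing decision after reflection can be expressed as a small, explicit policy. This is a hypothetical sketch. The `Route` values, the `StepOutcome` fields, and the three-round budget are assumptions chosen for illustration and do not reflect any specific orchestration product's API. Each decision also returns a plain-language reason that can be written to an audit log, something reflection alone does not produce.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    DELIVER = "deliver"    # release the output
    REVISE = "revise"      # send it back through another reflection round
    ESCALATE = "escalate"  # hand it to a human reviewer


@dataclass(frozen=True)
class StepOutcome:
    passed_evaluation: bool  # result of external evaluation, not self-critique
    high_risk: bool          # e.g. regulatory impact, set by business rules
    rounds_used: int


def decide(outcome: StepOutcome, max_rounds: int = 3) -> tuple[Route, str]:
    """Return the next route plus a reason suitable for an audit log."""
    if not outcome.passed_evaluation:
        if outcome.rounds_used < max_rounds:
            return Route.REVISE, "failed evaluation; revision budget remains"
        return Route.ESCALATE, "failed evaluation after exhausting revision budget"
    if outcome.high_risk:
        return Route.ESCALATE, "passed evaluation but is high risk; human sign-off required"
    return Route.DELIVER, "passed evaluation and is low risk"


audit_log = []
for outcome in [
    StepOutcome(passed_evaluation=True, high_risk=False, rounds_used=1),
    StepOutcome(passed_evaluation=True, high_risk=True, rounds_used=2),
    StepOutcome(passed_evaluation=False, high_risk=False, rounds_used=1),
    StepOutcome(passed_evaluation=False, high_risk=False, rounds_used=3),
]:
    route, reason = decide(outcome)
    audit_log.append((outcome, route, reason))
    print(route.value, "-", reason)
```

The policy checks evaluation failures first, so a high-risk output gets a chance to be fixed before a human spends time on it. Passing evaluation never lets high-risk work skip human review.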

Gartner’s August 2025 prediction that 40% of enterprise applications will be integrated with task-specific AI agents by the end of 2026 shows how quickly agents are spreading through enterprise software. At that scale, the organizations that succeed will be the ones that combine reflection with comprehensive governance frameworks rather than relying on it alone.

When Reflection Is the Right Pattern—And When It’s Not

Reflection works best when quality matters more than speed, outputs are subjective or interpretive, iteration adds clear value, and errors are low-risk.

Reflection on its own is insufficient when any of the following holds: accuracy is non-negotiable, decisions have regulatory impact, outputs must be traceable to sources, or policies must be enforced consistently.

Understanding this boundary is essential for enterprise adoption. It’s also the difference between AI that improves productivity and AI that creates compliance exposure.
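One way to make this boundary explicit in a design review is to encode it as a checklist. The sketch below is hypothetical. The `UseCase` fields mirror the conditions that make reflection insufficient, and each maps to a complementary control mentioned earlier in this article (tool use, human-in-the-loop review, evaluation). An empty result suggests reflection alone may be adequate; anything else lists what must be added.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class UseCase:
    accuracy_non_negotiable: bool
    regulatory_impact: bool
    needs_source_traceability: bool
    needs_consistent_policy_enforcement: bool


# Hypothetical mapping from each condition to a control that addresses it.
CONTROL_FOR_CONDITION = {
    "accuracy_non_negotiable": "tool use to verify facts",
    "regulatory_impact": "human-in-the-loop review",
    "needs_source_traceability": "retrieval from authoritative sources",
    "needs_consistent_policy_enforcement": "evaluation against defined standards",
}


def required_controls(use_case: UseCase) -> list[str]:
    """List controls reflection must be paired with; empty means reflection alone may do."""
    return [
        CONTROL_FOR_CONDITION[f.name]
        for f in fields(use_case)
        if getattr(use_case, f.name)
    ]


internal_brainstorm = UseCase(False, False, False, False)
product_faq_answer = UseCase(
    accuracy_non_negotiable=True,
    regulatory_impact=False,
    needs_source_traceability=True,
    needs_consistent_policy_enforcement=False,
)
loan_decision_letter = UseCase(True, True, True, True)

print(required_controls(internal_brainstorm))   # []
print(required_controls(product_faq_answer))    # ['tool use to verify facts', 'retrieval from authoritative sources']
print(len(required_controls(loan_decision_letter)))  # 4
```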

Gartner’s June 2025 research predicts that over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls. Deploying reflection patterns without adequate governance layers is a direct path to the last of those.

The Guardian Agent Paradigm

Gartner’s June 2025 research on guardian agents addresses exactly this gap. As enterprises move toward complex multi-agent systems that communicate at breakneck speed, humans cannot keep up with the potential for errors and malicious activity. That, Gartner argues, makes guardian agents necessary: agents that provide automated oversight, control, and security for AI applications and other agents.

Guardian agents serve three primary roles:

- Reviewers that identify and review AI-generated output for accuracy and acceptable use.

- Monitors that observe and track AI actions for follow-up.

- Protectors that adjust or block AI actions during operations.
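The three roles above are conceptual. The hypothetical `GuardianAgent` class below is a rough illustration only, not Gartner's design or any vendor's API. It sits outside the working agent and plays all three roles: it reviews outputs against a rule, records every action it sees, and blocks actions on a deny list. Real guardian agents would likely use richer policies and could adjust actions rather than only block them. This sketch just shows the separation of duties.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class GuardianAgent:
    blocked_actions: set[str]
    output_is_acceptable: Callable[[str], bool]  # hypothetical review rule
    log: list[tuple[str, str, str]] = field(default_factory=list)

    def review(self, agent_id: str, output: str) -> bool:
        """Reviewer: check AI-generated output for acceptable use."""
        ok = self.output_is_acceptable(output)
        self.log.append(("reviewer", agent_id, "approved" if ok else "rejected"))
        return ok

    def monitor(self, agent_id: str, action: str) -> None:
        """Monitor: record every action for later follow-up."""
        self.log.append(("monitor", agent_id, action))

    def protect(self, agent_id: str, action: str) -> bool:
        """Protector: decide at runtime whether an action may proceed."""
        self.monitor(agent_id, action)
        if action in self.blocked_actions:
            self.log.append(("protector", agent_id, f"blocked {action}"))
            return False
        return True


guardian = GuardianAgent(
    blocked_actions={"delete_customer_records"},
    output_is_acceptable=lambda text: "account number" not in text.lower(),
)
print(guardian.protect("billing-agent", "send_invoice"))                # True
print(guardian.protect("billing-agent", "delete_customer_records"))     # False
print(guardian.review("billing-agent", "Your invoice total is $120."))  # True
```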

Gartner predicts that guardian agents will capture 10–15% of the agentic AI market by 2030. That forecast reinforces a growing enterprise recognition: reflection alone cannot provide the oversight production systems require.

What QAT Global Has Learned About Reflection

At QAT Global, we view reflection as a foundational capability, not a safeguard.

Reflection improves quality, but it does not replace governance, delivery discipline, human accountability, or system-level controls.

In the systems we design, reflection is intentionally paired with evaluation, orchestration, and human oversight to ensure AI behaves as a dependable copilot rather than an unchecked author.

The goal isn’t smarter AI responses. The goal is reliable AI systems that perform consistently under real-world conditions and survive regulatory scrutiny.

We’ve seen too many organizations deploy reflection patterns in production, only to discover later—during an audit, after a compliance incident, or when a high-stakes decision fails review—that iterative self-improvement is not the same as verifiable correctness.

The organizations winning with agentic AI in 2026 aren’t necessarily the ones with the most sophisticated reflection loops. They’re the ones who understand when reflection is sufficient and when it must be paired with external validation, policy enforcement, and human judgment.

As discussed in our overview of essential agentic workflow patterns, enterprise-ready AI is not built by perfecting individual patterns in isolation. Reflection is powerful, but it achieves its full value only when combined with complementary patterns that enforce standards, manage risk, and provide accountability beyond the agent itself.

What Comes Next

Reflection is often the first agentic workflow pattern teams adopt because the value is immediately visible.

But reflection alone only addresses output quality.

In the next article in this series, we’ll explore the Tool Use Pattern: how agents interact with APIs, systems, and data, and why tool access becomes one of the biggest enterprise risk surfaces if not designed correctly.

Understanding tool use is a critical step toward building AI that operates safely inside real business environments. Because once your agents can act on the world (not just reason about it), the stakes change entirely.

Reflection solved your quality problem.

Now solve your governance problem.

QAT Global’s AI strategists help you determine which agentic patterns deliver value in your specific regulatory and operational context. Our custom engineering teams then build systems that combine reflection with the evaluation and oversight patterns your business actually needs. https://qat.com/contact/
