A Quick Story to Set the Stage

You’re navigating a new city with nothing but an old paper map. It’s detailed, sure—it shows every street, park, and landmark. But as you turn down Main Street, you suddenly hit a wall of honking cars and waving flags—a parade you didn’t know was happening. The map didn’t warn you. It couldn’t. You stop, reroute in frustration, and hope you’ll make it to your destination on time.

Now imagine the same trip, but this time you’ve got live GPS with real-time traffic updates. It knows about the parade, the construction detour, even the best coffee shop along your new route. You glide through side streets and arrive early—confident, informed, and stress-free.

That’s the difference between a traditional large language model (LLM) and one powered by Retrieval-Augmented Generation (RAG). LLMs are the map—rich in general knowledge but frozen in time, unable to adjust when the world changes. RAG is the live traffic feed: it injects up-to-date, specific information pulled from your own trusted sources, giving the model the context it needs to deliver accurate, relevant answers.

With RAG, you’re not just asking a model what it knows—you’re teaching it what you know, in real time.

What Is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation, or RAG, is a simple but powerful idea: combine the creativity of a large language model (LLM) with the precision of your organization’s own knowledge.

Traditional LLMs generate answers based on what they learned during training—a snapshot of the world frozen in time. That means they can “guess” well but can’t reference your internal data, recent updates, or domain-specific expertise. RAG fixes that by blending two key moves into one intelligent flow:

- Retrieve: The system first searches your trusted knowledge base—documents, policies, contracts, wikis, support tickets, research reports, or any internal data—and finds the most relevant snippets for your query.

- Generate: The model then uses those snippets to craft a response that’s both accurate and contextual, grounding every answer in real evidence.

Instead of relying on memory alone, a RAG-enabled model behaves like a sharp analyst who checks the source before speaking. It’s thoughtful, verifiable, and anchored in the facts you already own.

For enterprise leaders, that shift is transformative. RAG doesn’t just make AI smarter; it makes it trustworthy by grounding answers in your own internal documents and other credible sources. Every response can be traced, audited, and explained, built on the foundation of your organization’s knowledge rather than on the model’s best guess.

In short, RAG turns generative AI from a clever storyteller into a dependable subject-matter expert—one that knows where its answers come from.

When to Bring in RAG (and When to Let it Rest)

RAG isn’t the right tool for every job—and that’s okay. Like knowing when to use GPS versus when to follow a landmark, it helps to understand when retrieval actually adds value.

RAG is a great fit when:

- Facts change fast. Think of industries where information shifts daily—pricing updates, product inventory, regulatory rules, or software release notes. RAG ensures your AI reflects the latest truth, not last month’s data.

- Answers must come from your own materials. If your employees, partners, or customers rely on information locked in handbooks, SOPs, contracts, or client files, RAG keeps those answers accurate and safely inside your organization’s walls.

- Accuracy isn’t optional. In regulated or high-stakes environments, you don’t just want a confident response—you want one you can trace and verify, complete with citations that link back to the source.

- Your document set is too large for a prompt. When you’ve got thousands of pages of policies, support tickets, or research, RAG retrieves only what’s relevant instead of trying to cram it all into the model at once.

You can skip RAG when:

- You’re asking for general knowledge. Questions like “Explain zero trust in simple terms” or “What is cloud computing?” don’t need retrieval—the model’s training data already has you covered.

- You want creative or open-ended ideas. For brainstorming, campaign slogans, or story writing, RAG can actually constrain creativity. Sometimes you just want the model’s imagination, not its homework.

For enterprise use, RAG draws the line between AI that’s confident and AI that’s correct—between fast answers and right answers.

How RAG Works Behind the Scenes

Under the hood, Retrieval-Augmented Generation runs on two tracks—one built in advance and one that happens in real time. Together, they turn your organizational knowledge into a living, searchable intelligence system.

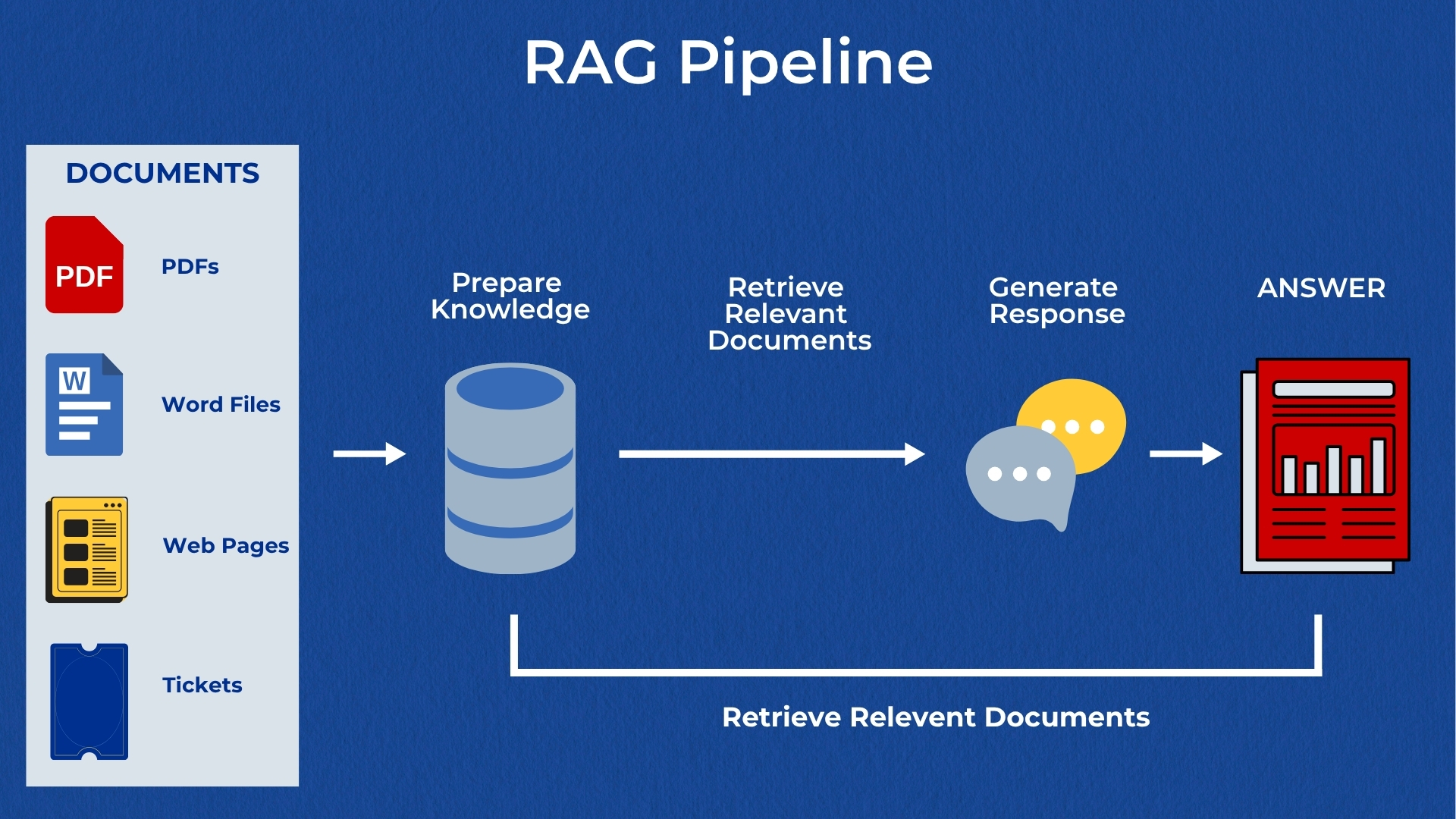

1. Prepare Your Knowledge (the groundwork)

Before RAG can answer questions, it needs to understand what your organization knows. That means:

- Gather your materials. PDFs, Word docs, webpages, support tickets, even database records—anywhere your institutional knowledge lives.

- Break them down. Long documents are split into smaller, meaningful chunks so the model can match them more precisely later.

- Fingerprint each piece. Every passage is converted into an embedding—a kind of numerical fingerprint that represents the meaning of the text, not just the words.

- Store and organize. These fingerprints, along with metadata (like the source file, page number, and permissions), are saved in a vector database, the “memory vault” the system searches through later.

This preparation phase turns your content into structured, searchable intelligence—ready to be retrieved on demand.
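To make the groundwork concrete, here is a minimal Python sketch of the four steps: gather, chunk, fingerprint, store. Everything in it is an assumption for illustration. `toy_embed` is a hashing trick that stands in for a real embedding model, the file name `handbook.pdf` is hypothetical, and a plain Python list plays the role of the vector database.

```python
import hashlib
import math
from dataclasses import dataclass, field


def chunk_text(text: str, max_words: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows (a simple stand-in for smarter chunking)."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def toy_embed(text: str, dims: int = 64) -> list[float]:
    """Hash each word into a fixed-size vector and normalize it to length 1.

    This is NOT a real embedding model: it only reflects which words appear.
    A production system would call an embedding model here instead.
    """
    vec = [0.0] * dims
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode()).hexdigest(), 16) % dims
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


@dataclass
class Record:
    text: str
    vector: list[float]
    metadata: dict = field(default_factory=dict)


def ingest(doc_text: str, source: str, allowed_roles: list[str]) -> list[Record]:
    """Chunk a document, embed each chunk, and attach source and permission metadata."""
    return [
        Record(
            text=chunk,
            vector=toy_embed(chunk),
            metadata={"source": source, "chunk": i, "allowed_roles": allowed_roles},
        )
        for i, chunk in enumerate(chunk_text(doc_text))
    ]


# Hypothetical 100-word document; the list below stands in for a vector database.
sample_doc = " ".join(f"word{i}" for i in range(100))
store = ingest(sample_doc, source="handbook.pdf", allowed_roles=["hr"])
```

The overlap between chunks is a common design choice: it lowers the chance that a sentence split across a chunk boundary becomes unfindable. The right chunk size depends on your content and is worth testing rather than guessing.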

2. Answer Questions (the real-time magic)

Once the groundwork is laid, RAG gets to work answering questions:

- Interpret the query. The user’s question is turned into its own embedding—a fingerprint of meaning.

- Find the closest matches. The system searches the vector store for passages that most closely match the question’s intent.

- Generate the response. Those passages are passed to the LLM with instructions such as: “Answer using this material. Cite your sources. If the information isn’t found, say you don’t know.”

- Deliver with confidence. The result is a grounded answer that references the exact pages or documents it came from.

This architecture creates traceable, explainable AI—essential for enterprise use where transparency, accuracy, and governance matter.

With RAG, your organization’s knowledge isn’t just stored—it’s activated, becoming part of a secure, intelligent system that can reason over your own content in real time.
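The question-answering flow above can be sketched in a few lines of Python. Treat this as an illustration under stated assumptions, not a reference implementation: the `embed` function is a simple word-count stand-in for a real embedding model, the passages and file names are made up, and the final call to a language model is left out. The instruction text in the prompt is the one quoted in the steps above.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical passages, as they might come out of the preparation phase.
PASSAGES = [
    {"source": "returns-policy.pdf", "page": 2,
     "text": "Customers may return items within 30 days of delivery for a full refund."},
    {"source": "shipping-faq.md", "page": 1,
     "text": "Standard shipping takes three to five business days."},
    {"source": "hr-handbook.pdf", "page": 14,
     "text": "Employees accrue vacation days each month."},
]


def retrieve(question: str, passages: list[dict], k: int = 2) -> list[dict]:
    """Rank passages by similarity to the question and keep the top k."""
    q = embed(question)
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p["text"])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, hits: list[dict]) -> str:
    """Assemble the instructions, the retrieved material, and the question into one prompt."""
    context = "\n".join(
        f"[{i}] ({h['source']}, p. {h['page']}) {h['text']}" for i, h in enumerate(hits, 1)
    )
    return (
        "Answer using this material. Cite your sources. "
        "If the information isn't found, say you don't know.\n\n"
        f"Material:\n{context}\n\nQuestion: {question}"
    )


question = "How many days do customers have to return items for a refund?"
hits = retrieve(question, PASSAGES, k=1)
prompt = build_prompt(question, hits)  # this string is what would be sent to the LLM
```

Keeping the source and page number next to each passage in the prompt is what makes citations possible later: the model can only point back to references it was actually shown.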

Key Takeaway: Why This Step Matters

RAG transforms data into decision-ready knowledge. By connecting your internal sources directly to your language model, every answer is grounded in fact, not assumption.

This approach gives enterprise leaders confidence that AI-driven insights are accurate, traceable, and aligned with real business context.

Understanding Embeddings: How AI Learns Meaning

Traditional keyword search looks for exact word matches—but people don’t talk that way.

“Send it back,” “refund,” and “returns within 30 days” all mean roughly the same thing, even though the words are different.

Embeddings solve this problem by translating text into a numerical form that captures meaning instead of just wording.

You can think of it like assigning every phrase a set of GPS coordinates in a multi-dimensional space. Phrases with similar meanings land close together, even if they use completely different language.

That’s how RAG finds the right passage—even if the question doesn’t match the document’s exact words.
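A small Python sketch can show what "close together" means in practice. The three-dimensional vectors below are hand-made for illustration; real embeddings come from a model and usually have hundreds or thousands of dimensions. The closeness measure used here, cosine similarity, is one common choice, not the only one.

```python
import math

# Hand-made vectors for illustration only; they are not output from any real model.
VECTORS = {
    "send it back": [0.9, 0.1, 0.0],
    "refund": [0.8, 0.2, 0.1],
    "returns within 30 days": [0.85, 0.15, 0.05],
    "quarterly sales report": [0.0, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Near 1.0 means the vectors point the same way; near 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def nearest(query: str, vectors: dict[str, list[float]]) -> str:
    """Return the phrase whose vector is most similar to the query phrase's vector."""
    q = vectors[query]
    others = {phrase: vec for phrase, vec in vectors.items() if phrase != query}
    return max(others, key=lambda phrase: cosine_similarity(q, others[phrase]))


closest = nearest("refund", VECTORS)
unrelated_score = cosine_similarity(VECTORS["refund"], VECTORS["quarterly sales report"])
```

With these made-up coordinates, the return-related phrases score close to 1.0 against each other, while the sales report scores far lower, even though none of the phrases share a word.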

For business leaders, embeddings unlock context-aware intelligence across all your enterprise data and customer knowledge bases—making search, insights, and automation far more human-like in understanding.

Building a RAG Solution: Decisions You’ll Make

Once you understand how RAG works, the next question is how to build it—and build it well. A production-ready RAG system isn’t just a clever demo; it’s an ecosystem that balances performance, governance, and cost.

Think of it like constructing a bridge: every design choice affects how strong, scalable, and safe it will be.

Here are the key decisions enterprise teams will face along the way:

- Audience and SLAs. Internal users might wait an extra second if the answer is bulletproof. Customers won’t. Define your expectations for latency and accuracy early.

- Content map. Identify which data sources matter most (policies, wikis, contracts, ticketing systems) and who owns them. Plan for updates, retention, and permission logs so your content stays both current and compliant.

- Security and governance. Apply access controls at retrieval time, log every query, and redact sensitive fields when needed. Governance isn’t an afterthought—it’s baked into the architecture.

- Model choices. Your large language model and embedding model don’t have to come from the same vendor. Mix and match for the right balance of cost, speed, and quality.

- Retrieval stack. Managed vector databases (like cloud-based options) are fast to deploy, while self-hosted setups give you more control. Choose based on your scale, compliance needs, and budget.

- Orchestration. Frameworks help tie ingestion, retrieval, and prompting together. Use them to accelerate development, but make sure you still understand what’s happening under the hood.

- Evaluation and feedback. Track what was retrieved, what was generated, and whether humans agreed with the answer. Those feedback loops are how your RAG system keeps learning and improving over time.

A well-designed RAG system doesn’t just answer questions—it becomes part of your organization’s knowledge fabric, continually learning, governing, and optimizing the flow of information across teams.
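Tracking what was retrieved, what was generated, and whether a human agreed can start with one small record per interaction. The Python sketch below is one possible shape for that record; the class name `RagTrace`, its fields, and the sample values are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class RagTrace:
    """One logged interaction: the question, the evidence, the answer, and the review."""
    question: str
    retrieved_sources: list[str]
    answer: str
    human_verdict: Optional[str] = None  # e.g. "agree" or "disagree"; None until reviewed
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize to one JSON line, suitable for an append-only log."""
        return json.dumps(asdict(self))


# Hypothetical interaction, logged first and reviewed later.
trace = RagTrace(
    question="What is our policy on remote work equipment?",
    retrieved_sources=["it-policy.pdf#page=4"],
    answer="Employees may request one monitor and one keyboard [1].",
)
trace.human_verdict = "agree"
log_line = trace.to_json()
```

Storing the retrieved sources alongside the answer lets you tell apart two different failures later: retrieval found the wrong passage, or the model misused the right one.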

What RAG Can’t Do (and Why That’s a Good Thing)

After making all the right design decisions, it’s easy to expect RAG to solve everything. But even the smartest systems have limits—and knowing them is part of doing it right.

RAG won’t:

- Fix bad source material. If your documents are outdated, inconsistent, or incomplete, RAG will surface those flaws, not hide them. Garbage in still means garbage out—just faster.

- Eliminate hallucinations by magic. While RAG reduces the risk of the model making things up, it doesn’t erase it entirely. The best results come from combining retrieval with clear prompts, tight instructions, and proper guardrails.

- Replace business intelligence. If you’re looking for aggregated numbers, joins, or KPIs, query your database or semantic layer directly. RAG complements BI—it doesn’t replace it.

- Read minds. RAG still depends on how you ask. Precise, well-structured questions produce precise, well-grounded answers.

At its core, RAG is a multiplier—it amplifies good data hygiene, solid information architecture, and thoughtful governance.

It’s not a shortcut around them; it’s what rewards you for doing them well.

A Pragmatic Enterprise Rollout

RAG isn’t a single product—it’s an ecosystem that touches your data, processes, and people. Rolling it out successfully means thinking like an architect, not a magician: start small, measure, refine, and expand with purpose.

Here’s a practical path to doing it right:

- Start narrow. Begin with one department and one well-defined content set—like HR policies, IT support knowledge bases, or product documentation. Success in one focused area builds trust and momentum.

- Define success early. Identify a handful of metrics that matter—accuracy rate, response time, user satisfaction, or reduction in helpdesk queries—and measure them consistently.

- Close the loop. Let users flag wrong or incomplete answers. Feed that feedback into retraining, better document chunking, or source cleanup. Every correction strengthens the system.

- Scale out thoughtfully. Once you’re consistently hitting your targets, expand to new departments, content collections, or languages. Growth should be steady and supported—not rushed.
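As a rough illustration of "define success early," the Python sketch below computes two of the metrics mentioned above, accuracy rate and response time, from a pilot log. The sample numbers and the thresholds (80% accuracy, 3 seconds at the 95th percentile) are invented for the example; your own targets will differ.

```python
import math

# Made-up pilot log: (answer_was_correct, response_seconds) per interaction.
interactions = [(True, 1.2), (True, 0.9), (False, 2.4), (True, 1.1), (True, 3.0)]


def accuracy_rate(rows: list[tuple[bool, float]]) -> float:
    """Share of answers that reviewers marked as correct."""
    return sum(1 for ok, _ in rows if ok) / len(rows)


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


accuracy = accuracy_rate(interactions)
p95_seconds = percentile([seconds for _, seconds in interactions], 95)

# Hypothetical go/no-go rule for expanding the pilot to the next department.
ready_to_scale = accuracy >= 0.8 and p95_seconds <= 3.0
```

A percentile is used for response time instead of an average because a few very slow answers can hide behind a healthy-looking mean.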

RAG Costs, Risks, and Controls

Rolling out RAG isn’t just about building—it’s about balancing investment with oversight.

Here’s what to plan for:

Costs. Expect spending in three main areas:

- Model usage – the cost of running your language and embedding models.

- Storage and search – maintaining embeddings in your vector database.

- People time – for ingestion, quality control, and governance.

Pilot projects are typically far less expensive than full re-platform efforts, yet they deliver immediate insight into value.

Risks. The biggest risks are data leakage, stale or outdated answers, and misplaced trust in citations that look authoritative but aren’t.

Controls. Manage these risks with enterprise-grade safeguards:

- Enforce role-based access at retrieval time so users only see what they should.

- Set up automated freshness checks to flag aging content.

- Use clear citation formats so sources are traceable.

- Conduct regular red teaming of prompts to test for vulnerabilities and bias.

A thoughtful rollout keeps innovation grounded in responsibility—turning RAG from an experiment into a dependable business capability.
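Two of the controls above, role-based access at retrieval time and automated freshness checks, can be sketched as simple filters over chunk metadata. This is a minimal Python illustration, assuming each stored chunk carries `allowed_roles` and `last_reviewed` fields; those field names, the sample chunks, and the one-year threshold are hypothetical.

```python
from datetime import date, timedelta

# Hypothetical chunks with permission and review metadata attached at ingestion.
CHUNKS = [
    {"text": "Salary bands for 2024.",
     "allowed_roles": {"hr"},
     "last_reviewed": date(2024, 1, 15)},
    {"text": "How to reset your VPN token.",
     "allowed_roles": {"hr", "it", "staff"},
     "last_reviewed": date(2022, 3, 1)},
]


def visible_to(chunks: list[dict], user_roles: list[str]) -> list[dict]:
    """Keep only chunks whose allowed roles overlap the user's roles."""
    roles = set(user_roles)
    return [c for c in chunks if c["allowed_roles"] & roles]


def stale(chunks: list[dict], today: date, max_age_days: int = 365) -> list[dict]:
    """Flag chunks that have not been reviewed within the allowed window."""
    cutoff = today - timedelta(days=max_age_days)
    return [c for c in chunks if c["last_reviewed"] < cutoff]


today = date(2024, 6, 1)  # fixed date so the example is reproducible
staff_view = visible_to(CHUNKS, ["staff"])
aging = stale(CHUNKS, today)
```

The important design point is where the permission filter runs: before any passage reaches the model. Content a user is not allowed to see should never appear in the prompt, because anything in the prompt can surface in the answer.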

The Enterprise Advantage: Turning Knowledge into an Edge

By this point, you’ve seen how RAG works and what it takes to deploy it responsibly. Once in place, it becomes something far more powerful than a technical upgrade—it becomes your organization’s navigation system for knowledge.

Remember that paper map from the beginning? RAG is the real-time traffic layer your enterprise has been missing. It takes the static knowledge you already own and turns it into a living, breathing network of insights that adapts as your business moves.

In most organizations, valuable information lives everywhere—across PDFs, SharePoint folders, Slack threads, ticketing systems, and databases. It’s scattered, duplicated, and hard to access when you need it most. That’s where RAG changes the game.

RAG doesn’t just make AI smarter—it makes your knowledge actionable. By connecting large language models directly to your own data, it transforms static documents into dynamic intelligence that informs decisions, speeds up work, and reduces risk.

Here’s what that looks like in practice:

- Smarter decision-making. Teams can ask natural questions—“What are the current pricing terms for this client?” or “What’s our policy on remote work equipment?”—and get instant, sourced answers drawn from the right documents.

- Faster, safer workflows. Instead of searching through folders or sending follow-up emails for clarification, employees can query an AI assistant that’s grounded in the latest company policies—saving hours while ensuring consistency.

- Compliance and governance built in. Because RAG cites its sources, every response is traceable. That means you can audit the evidence behind any answer—crucial for industries where accuracy and transparency aren’t optional.

- Scaling institutional knowledge. As organizations grow, information tends to fragment. RAG helps unify that knowledge and make it discoverable for everyone—from new hires to executives—without compromising security.

In short, RAG turns information overload into insight on demand. It helps enterprises move beyond AI that talks to AI that truly knows—grounded, reliable, and always ready with the right answer.

And when it’s built with care—balancing quality, agility, and transparency—it becomes exactly what every enterprise needs in today’s landscape: a trusted guide that keeps you moving forward, no matter how often the route changes.

Conclusion: Navigating the Future with Confidence

The road to enterprise AI doesn’t have to be uncertain. With Retrieval-Augmented Generation, organizations can move from static knowledge to living intelligence—where every decision is guided by current, trusted information.

Like switching from an old paper map to live traffic, RAG gives your business the context it needs to navigate complexity with confidence. It brings clarity to chaos, precision to process, and trust to every answer.

At QAT Global, we believe the real power of AI isn’t in replacing people—it’s in empowering them with better tools and better data. Our approach to RAG is grounded in what defines us: Quality, Agility, and Transparency. We help enterprises build solutions that are not only smart, but right—because your success is our mission.

Executive Takeaways

Retrieval-Augmented Generation makes AI truly useful on your proprietary knowledge—without handing that data to the world. It strengthens trust by showing its work: every answer is grounded in your own content and backed by verifiable sources.

Start small, measure outcomes, and iterate. The organizations that win with RAG are the ones that pair clean, well-managed content and clear guardrails with a continuous feedback loop for improvement.

Think of it as upgrading from a static map to a dynamic navigation system—one that knows your roads, understands your rules, and gets smarter with every mile.

Partnering with QAT Global to Implement RAG

Turning RAG from a concept into a dependable enterprise capability takes more than the right model—it takes the right architecture, governance, and delivery discipline.

At QAT Global, we help organizations design and deploy secure, production-grade RAG systems built on Microsoft Azure and modern MLOps frameworks. Our teams ensure your AI draws from the right data, with full observability, strong compliance controls, and traceable results at every step.

We can help if you’re exploring how to:

- Ground large language models in private, permissioned data

- Build a scalable retrieval pipeline with compliance and governance in mind

- Integrate RAG seamlessly into your existing software ecosystem

At QAT Global, your success is our mission.
